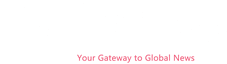

When you watch this year’s English Premier League soccer games, there’s a high chance you may get mad at some of the offside calls. However, unlike past seasons, your anger won't be because the call, or the lack thereof, was obviously lousy. That’s because the League's new offside-detection system is apparently able to spot a player's position on the field, and call them offside, with more accuracy than ever—and it’s all powered by iPhones.

The League’s rollout of this new semiautomated offside tech later in the 2024–25 season promises long-awaited relief for players and fans frustrated by years of problems with previous video-assistant referee (VAR) systems, from extensive delays and human process errors to concerns about the precision of in-game calls due to limitations of the existing technology.

Genius Sports and subsidiary Second Spectrum, known for years of optical tracking and data-based work in NBA basketball, will be debuting this smartphone-based system known internally as “Dragon.”

How Dragon Works: iPhone Cameras, Machine Intelligence, and a Digital Twin

The system uses dozens of iPhones, whose cameras capture high-frame-rate video from multiple angles. Dragon’s custom machine intelligence software supposedly allows the smartphones to communicate and work together to process the visual data all those cameras collect.

What’s more, in addition to its use in soccer games, it could also serve as a driver of new motion-capture and artificial-intelligence models across many other sports. WIRED obtained exclusive access to Dragon’s development and imminent deployment in the EPL.

The Evolution of Motion-Capture in Sports

Most of the earliest motion-capture systems in sports were not very complex and required little computing power. Want to know how many miles an athlete ran in a given game? What their top or average speed was, or how often they performed certain basic actions? Anywhere from one to a half-dozen cameras, plus some specialized software, can answer these kinds of simple questions accurately enough to satisfy any realistic need.

When the queries get more complex, though, so does the technological burden. “There are all types of weird situations that happen in sports,” says Mike D’Auria, EVP of sports and technology partnerships with Genius, who spent years with Second Spectrum, which has now been folded into the broader Genius Sports umbrella. “Players clump up, players pile on top of each other.”

That causes “occlusion,” as the industry terms it. Even with a dozen or so cameras, there isn’t always a clear angle on an entire play. Historically, machine learning systems have filled in those gaps with what amounts to educated guesses about where uncaptured elements are likely to be. For many use cases, those types of guesses are fine. But once you’re in a situation where guessing isn’t good enough, like when game referees are relying on your technology to adjudicate a call on the field, a higher standard of performance has to be enforced.
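To make the "educated guess" idea concrete, here is a minimal sketch that fills occluded samples in a single tracked coordinate by straight-line interpolation between the last and next sightings. This is a deliberately simple stand-in, not the machine learning approach the tracking vendors actually use, and the function name `fill_occluded` is hypothetical.

```python
from typing import List, Optional


def fill_occluded(track: List[Optional[float]]) -> List[Optional[float]]:
    """Fill None gaps (occluded frames) by linear interpolation.

    Only gaps bounded by an observed sample on both sides are filled;
    leading and trailing gaps stay None because there is nothing to
    interpolate between.
    """
    filled = list(track)
    last_known = None  # index of the most recent observed sample
    for i, value in enumerate(track):
        if value is None:
            continue
        if last_known is not None and i - last_known > 1:
            start = track[last_known]
            step = (value - start) / (i - last_known)
            for j in range(last_known + 1, i):
                filled[j] = start + step * (j - last_known)
        last_known = i
    return filled


if __name__ == "__main__":
    # A player's x-position (metres) with two occluded frames in the middle.
    print(fill_occluded([0.0, None, None, 3.0]))
```

A guess like this is fine for distance-covered stats, but it assumes the player moved at constant speed while hidden, which is exactly the kind of assumption that cannot decide a tight offside call.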

Offside Rules: A Technological Challenge

Introduced in 1883 to prevent players from lurking near the opponent's goal, soccer’s offside rule is a glaring, often contentious example. Accurately calling a player offside requires knowing the precise moment the ball is played, plus whether an attacking player was positioned behind the opponent’s final defender at that instant. Prior semiautomated offside systems—like the one used at the 2022 FIFA World Cup and this summer's European Championships in Germany—use between 10 and 15 cameras and a sensor inside the ball to track a few dozen body points on each player. But they too often fall victim to occlusion and can't accurately parse these fleeting moments.
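The geometric part of that decision can be sketched in a few lines. The example below is a simplified reading of the offside-position test, reduced to one coordinate along the length of the pitch: the attacker must be in the opponents' half and nearer the goal line than both the ball and the second-to-last opponent (the goalkeeper usually being the last). It ignores which body parts count and whether the player is involved in play, and every name in it (`Snapshot`, `in_offside_position`, the 52.5-meter halfway line) is an assumption for illustration, not part of any real system.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Snapshot:
    """Positions along the pitch length (metres) at the instant the ball is played.

    The attacking team is assumed to attack toward larger x.
    """
    attacker_x: float
    ball_x: float
    opponent_xs: Sequence[float]  # every opponent, goalkeeper included
    halfway_x: float = 52.5       # assumes a 105 m pitch


def in_offside_position(s: Snapshot) -> bool:
    """Simplified check: beyond the halfway line, the ball, and the second-to-last opponent."""
    if len(s.opponent_xs) < 2:
        raise ValueError("need at least two opponent positions")
    # The second-to-last opponent is the second-deepest one.
    second_last = sorted(s.opponent_xs, reverse=True)[1]
    # Being level with the ball or the defender counts as onside, hence strict '>'.
    return (
        s.attacker_x > s.halfway_x
        and s.attacker_x > s.ball_x
        and s.attacker_x > second_last
    )


if __name__ == "__main__":
    tight_call = Snapshot(attacker_x=90.0, ball_x=80.0, opponent_xs=[104.0, 89.5, 70.0])
    print(in_offside_position(tight_call))
```

The comparison itself is trivial; the hard part, and the part the cameras exist for, is getting trustworthy values for those positions at exactly the right instant.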

Dragon's Approach: iPhone Power and Machine Learning

Dragon, according to Genius, will initially use at least 28 iPhone cameras at every stadium in the Premier League. (More cameras may be used in certain stadiums throughout the year, the company says.) The system uses the built-in cameras of iPhone 14 models and newer. The iPhones are housed in custom waterproof cases fitted with cooling fans and are connected to a power source. The team designed mounts that hold up to four iPhones grouped together.

Once the iPhones are positioned around the pitch, together they capture a constant stream of video from multiple angles. Camera mounts can be moved to change coverage zones in certain facilities, per Genius, but will typically be stationary during actual play to ensure proper coverage and avoid recalibration needs on the fly. This wealth of visuals apparently gives Dragon the ability to track between 7,000 and 10,000 points on each player at all times.

“You’re going from 30, 40, 50 data points on a player to, no, I’m actually going to track the contours of your body,” D’Auria says. Things like muscle mass, skeletal frame differences, and even gait—all of which can matter for über-precise offside calls—are recorded and can be analyzed in what amounts to a true virtual re-creation of actual events.

Dragon leverages the ability of iPhones to capture video in ultrahigh frame rates, mitigating tricky instances of occlusion that can obscure the precise kick point of the ball.

D’Auria offers a simple example: Watch some broadcast video of soccer balls being kicked, but slow the clips down enough so you watch the action progress frame by frame. “You will in many instances miss the kick point,” D’Auria says. “The kick point will be between two frames of video … You go from one frame where the ball is not on the foot yet to the next frame and the ball has already left the foot and gone in the other direction.”

Most broadcast video today is captured at 50 or 60 frames per second. Dragon can capture up to 200 frames per second, which would shrink the gaps between frames by 75 percent relative to 50-fps footage. (The initial EPL system will be capped at 100 fps to balance latency, accuracy, and costs.) The system can auto-detect important impending events, such as a possible offside call, and scale up the frame rate of certain cameras temporarily, then scale back down when appropriate to save computing power.
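The arithmetic behind those frame-rate figures is easy to check. The sketch below computes the gap between frames at each rate and how far a player could move inside one gap; the 8 m/s sprint speed is an illustrative assumption, not a figure from Genius, and the function names are made up for this example.

```python
def frame_gap_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def gap_reduction(base_fps: float, new_fps: float) -> float:
    """Fraction by which the inter-frame gap shrinks when moving from base_fps to new_fps."""
    return 1.0 - frame_gap_ms(new_fps) / frame_gap_ms(base_fps)


def travel_per_frame_m(speed_m_per_s: float, fps: float) -> float:
    """Distance covered between two consecutive frames at a given speed."""
    return speed_m_per_s * frame_gap_ms(fps) / 1000.0


if __name__ == "__main__":
    SPRINT_SPEED = 8.0  # m/s, illustrative assumption for a sprinting player
    for fps in (50, 60, 100, 200):
        gap = frame_gap_ms(fps)
        drift_cm = travel_per_frame_m(SPRINT_SPEED, fps) * 100
        print(f"{fps:>3} fps: {gap:5.1f} ms between frames, "
              f"~{drift_cm:4.1f} cm of player movement per gap")
    print(f"50 -> 200 fps shrinks the gap by {gap_reduction(50, 200):.0%}")
    print(f"50 -> 100 fps shrinks the gap by {gap_reduction(50, 100):.0%}")
```

Going from 50 to 200 fps cuts the gap from 20 milliseconds to 5, which is where the 75 percent figure comes from; starting from 60 fps the reduction is closer to 70 percent, and the 100-fps cap planned for the initial EPL system halves the 50-fps gap.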

Machine Intelligence and Contextual Understanding

Facilitating this automation is Dragon’s other key feature: A machine intelligence system running on the backend known internally as “object semantic mesh.” Utilizing Genius’ years of converting optical basketball data, this machine learning program has been trained on common soccer events or situations over several seasons. It’s not just capturing movements, it’s contextualizing them in real time—and in some cases even learning from them.

Don’t worry about a full takeover from our robot overlords, though. While both the EPL and Genius declined to provide specifics (some of which, including timing, are still being determined ahead of Dragon’s in-season launch), sources familiar with the setup confirmed that humans will make the final decision on all offside calls, with the assistance of these AI tools.

Why iPhones? Scalability, Power, and Cost

Given how cheap and powerful smartphones are, one has to ask why it took so long for anyone in sports to plumb this well.

Today’s iPhones are as powerful as the world’s greatest supercomputers were 20 years ago. Whereas other modern optical-tracking systems require expensive fiber-optic cables and servers to connect fancy cameras to computers tasked with managing the data being collected, today’s $1,000 smartphones can handle both those tasks largely alone.

The Premier League probably doesn’t feel the need to be frugal; it’s the richest league in the world’s most popular sport. But Genius says other major soccer leagues have shown interest in Dragon, and keeping costs down may be a higher priority in the future. Dragon’s ability to scale up or down ensures readiness for any budget or need. D’Auria says the team has tested a system with as few as 10 cameras or as many as 100 in a single venue.

“What we’ve started to do is modulate the answer to the number of cameras we need based on the problems we want to solve,” D’Auria says. If the problem to be solved is a system for detecting whether a player is offside, a few dozen well-positioned iPhones should do the trick.

“If a harder problem comes up in the future, it’s relatively easy for us to work on the install base, or the technical background that we have in a venue, and just go add 10, 20, 30, 40 different cameras,” he says. “Maybe we want to focus them on certain parts of the field or deploy them for specific purposes.”

This kind of scalability also brings to the table the concept of the “digital twin” in sports. By capturing streams of video and positioning data as a player moves on the field, that player can be re-created virtually—their movements, likeness, and hand gestures, all rendered digitally in real time. This is something that’s typically been possible with only the types of high-priced cameras and computer systems used in Hollywood and in video game creation.

If digital twins can be created in sports, their uses go beyond officiating. Broadcasters can use them in digital overlays that show real-time stats, or in virtual reality, so you can watch a game inside your VR headset.

Soccer is merely the first playground for this tech. Just about any sport can draw value from digital twin creation, and Genius hopes to make inroads in basketball and American football soon.

The Big Question: Can Dragon Fix VAR?

But as intriguing as a soccer digital twin sounds, can Dragon actually remedy the game’s offside-detection issues? After all, constant issues with prior VAR systems have inspired little confidence in motion-capture technology among soccer’s main stakeholders or its fans.

Genius says it’s been testing Dragon for several years, both in the EPL and several other venues, in multiple formats. The company employs several internal analysts who project tracking data into a video format, then go frame by frame alongside broadcast video to detect any discrepancies. This allows the team to continuously retrain its models until such errors are, in theory, eliminated. Genius analysts consider this the foundational testing level, a baseline on top of which others are layered.

Dragon’s inputs have been compared side by side with VAR and detection systems to validate their basic accuracy. They’ve also been validated manually: Engineers spent long hours with various sport stakeholders (coaches, players, management), running through complex plays and confirming that the system’s outputs make sense. Every client considering use of Dragon also has internal teams who scrutinize the system and validate its outputs.

“We’ve done this with groups like FIFA, where we’ve gone through extensive tests,” D’Auria says. “The Dragon system is FIFA-validated. They’ll do tests where players wear a Vicon [motion-capture] system, and we track them, and they compare datasets and look for errors. We’ve gone through five or six machinations of this.”

Both Genius and EPL representatives declined to provide any specific testing information or results to WIRED, stating that, despite evaluating the iPhone system side by side with VAR, comparisons to prior motion-capture systems are tricky due to order-of-magnitude differences in the quantity and quality of data being created. Neither the EPL nor Genius would give any indication of how much more accurate the smartphone tech is compared with VAR.

Of course, the real evaluation will be made by fans and players, who will need to see Dragon in action to believe it actually makes a difference. The last few years of VAR absurdity have left an understandably bad taste in many mouths where optical tracking is concerned.

But when that first semiautomated offside call comes in this season in the UK, remember that this isn’t just the same old setup in different wrapping. It’s the next generation of motion capture, one that stakeholders across sports and in the AI community will be watching closely. Fans won’t have much, if any, tolerance for further issues with motion-capture-based systems. Genius and the EPL are confident they’re up to the challenge. We shall see. Let the games begin.