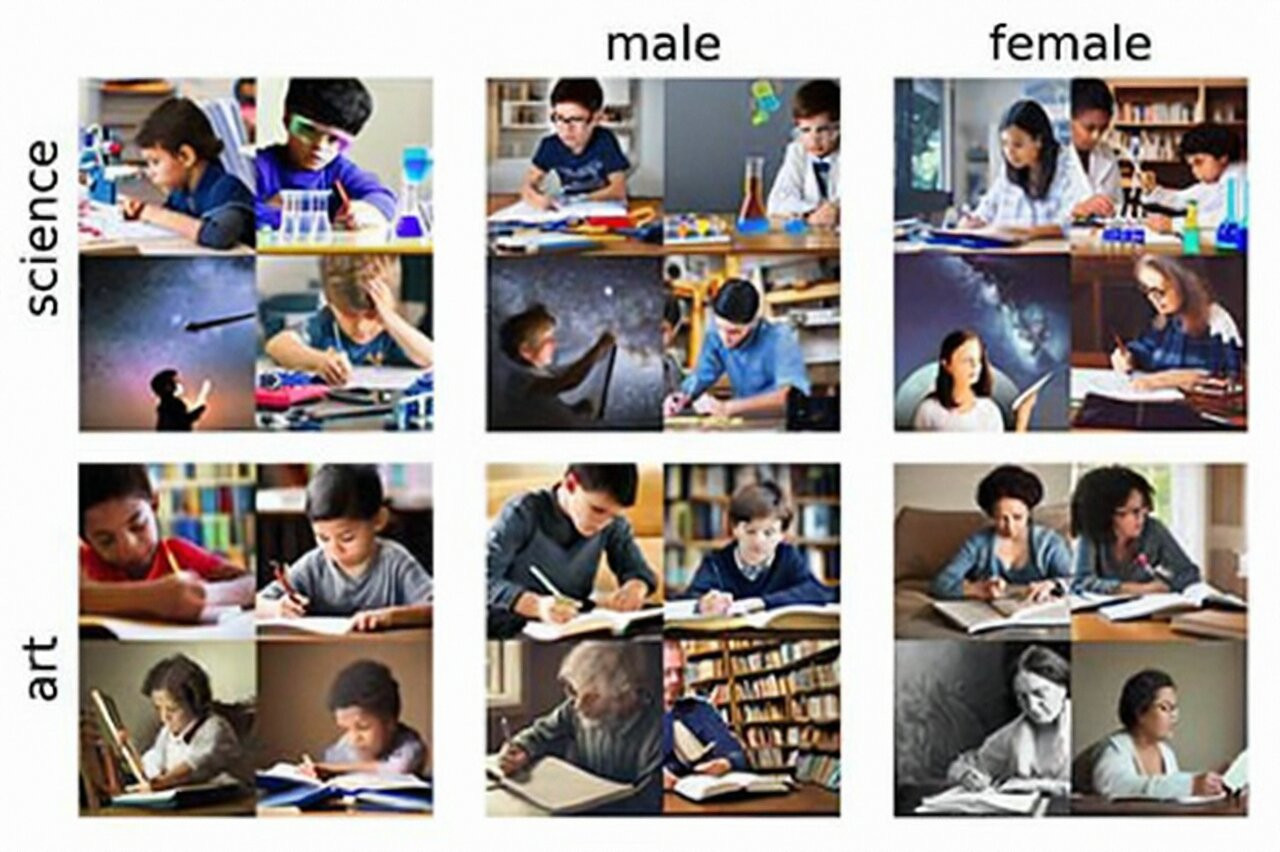

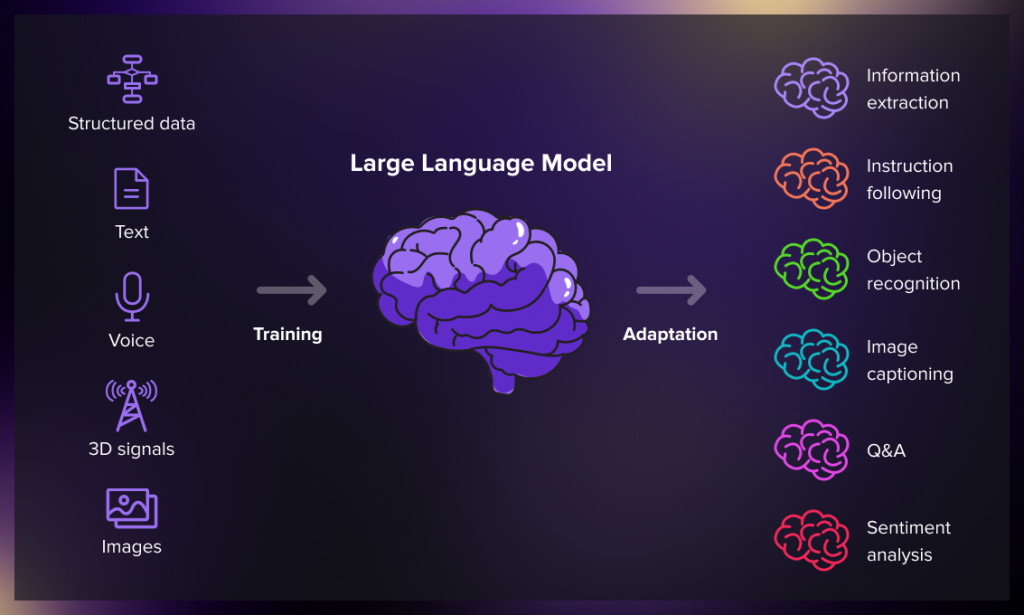

The use cases for generative AI (GenAI) and large language models have increased significantly. From OpenAI's ChatGPT to Microsoft's Copilot, Perplexity, and Google's Gemini, these tools are getting faster, more adaptive, and more widely available. As GenAI becomes more openly accessible, what can be done to mitigate bias? Is culturally informed AI possible?

AI is here to stay. That much is clear. What is unclear is how the technology will affect the ways we learn and understand the world around us. Generative AI and large language models are constantly changing and improving. Since its release in late 2022, ChatGPT has driven a surge in GenAI use. That prevalence has created a new need to understand the algorithms that make these tools so easy to use.

Even before GenAI's explosion, experts such as Ruha Benjamin of Princeton University were questioning the ways culture intersects with technology and outlining the risks of the rapid, widespread adoption of technologies such as GenAI and large language models. The question becomes: what does this mean for those of us in the channel ecosystem who use GenAI tools while working toward becoming inclusive leaders?

Unpacking Bias

The bedrock of sustainable inclusive leadership is understanding and unpacking bias: individually, culturally, and, most importantly, organizationally. Understanding where bias lies within policy and structure is fundamental to building a culture of equity and belonging. And, as Dr. Ashley Goodwin-Lowe of Ingram Micro continues to remind us, belonging is good for business.

So, if belonging is good for business, and inclusive leaders who mitigate policy bias are the bedrock of strong organizations, we all have a responsibility to understand where, when, and how bias creeps into our administrative decision-making. That includes understanding how bias finds its way into product creation.

Where Bias Can Occur in AI Development

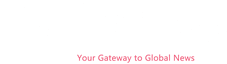

As you can tell, I have been thinking a lot about this, and, like any good scholar, I like to do my research. My search took me to the digital library, specifically to the Chapman University AI Hub. According to the AI Hub, bias can occur at four stages of AI development: data collection, data labeling, model training, and deployment. Simply put, every stage of the AI pipeline can produce biased outputs, and most of that bias is neither intentional nor malicious.

There's a saying for this: bad data in, bad data out. The solution is to be intentional throughout the development process. Data that is not diverse or representative can lead to bias. Labeling data in unclear ways can lead to bias. Failing to design the AI model to handle diverse inputs can produce bias. And lastly, a lack of monitoring after implementation and deployment allows bias to persist.

Without intentionality in design, bias can and will make its way into even the most well-meaning, well-developed algorithm. How do we, as inclusive leaders, use GenAI responsibly, knowing that bias may be a regular occurrence?

ChatBlackGPT: An Intentional Approach

Enter developer Erin Reddick, founder and CEO of ChatBlackGPT. Launched on Juneteenth (June 19), 2024, the project focuses on amplifying Black voices and experiences. "We aim to ensure our AI reflects the rich diversity and cultural significance of the Black community," Reddick (pictured above) said in a statement obtained by CRN. The OpenAI plugin, now in beta, is intentionally focused on cultural representation and provides users with a depth of resources and knowledge.

"We know the fast growth of GenAI models has led to quick decisions which may seem harmless but can lead to bias and the erasure of Black experiences, [and those of] people of color, because of a lack of cultural context and intention," said Erica Shoemate, independent policy advisor to ChatBlackGPT, in an interview.

“It’s important to do this now, from the beginning.”

The Inclusive Leadership Newsletter is a must-read for news, tips, and strategies focused on advancing successful diversity, equity, and inclusion initiatives in technology and across the IT channel. Subscribe today!