Dozens of nations around the world are rushing to pass legislation regulating the design and use of artificial intelligence. Ahead of most, the European Union just passed a comprehensive AI Act. Its stringent restrictions will come into force over the next two years. Many other nations are not far behind. Global corporate players are already lamenting the resulting regulatory fragmentation, warning that it will deprive users of valuable services or, at the very least, raise costs that consumers will ultimately have to bear. They aren’t wrong. Regulatory diversity does lead to higher transaction costs. But their innocent-sounding warning on behalf of the world’s consumers is not only a bit self-serving; it’s also analytically flawed.

If AI is the transformational technology that will generate huge windfalls and reconfigure our economies, perhaps even society as we know it, you can see why regulators at all levels want a piece of the action. But for many nations, especially those whose economic power is growing rapidly, the way the internet has been regulated over the past three decades serves as a cautionary tale.

Initially, the internet’s core governance tasks of managing IP numbers and domain names (the internet’s “name space”) were in U.S. hands. As the internet grew into a global phenomenon, this arrangement became untenable. But the tasks weren’t taken over by a subunit of the United Nations or by the International Telecommunication Union. Instead, they went to the Internet Corporation for Assigned Names and Numbers (ICANN), a purpose-built, California-based nonprofit organization that subsequently overhauled its internal governance repeatedly and fundamentally. BRICS members, many nations in the global south, and others accused the United States of having created ICANN to cement its power over the core of the global internet.

At one of a series of international diplomatic gatherings on the issue, the EU tried to get ICANN to commit to fundamental rights in its governance. But the United States teamed up with China and Russia to sink the proposal. To explain U.S. opposition, one needs to look at internet governance through a realist’s lens of power: The internet holds too much significance for U.S. interests. Keeping name space management at ICANN, unencumbered by any substantive constraints, ensures that the United States retains its levers of influence.

The AI Power Grab: A New Global Game

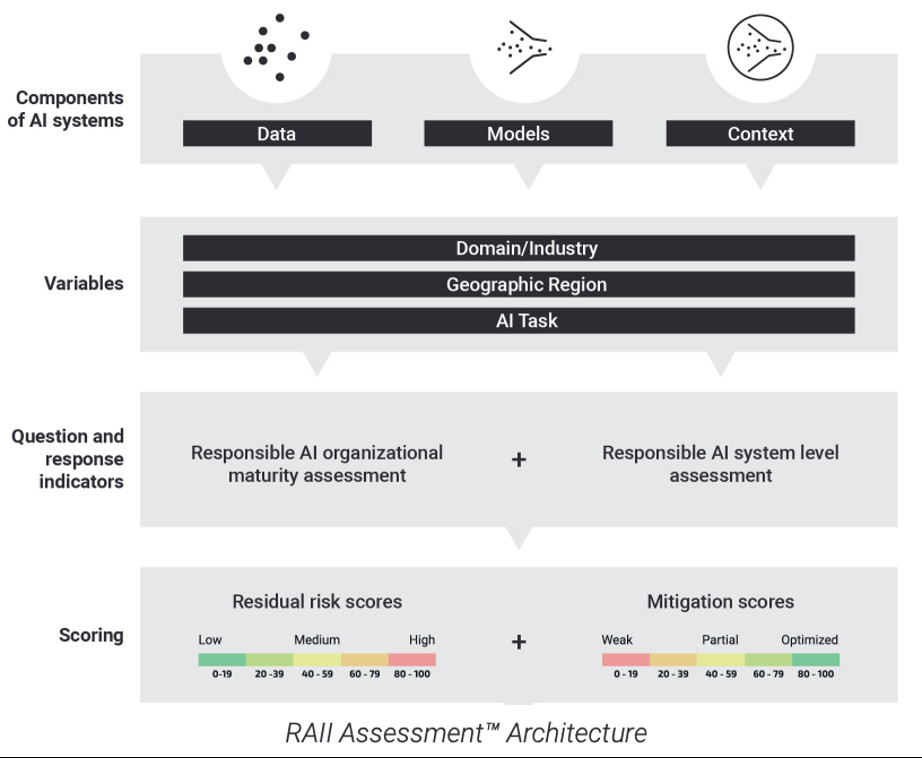

With AI today eclipsing the internet in importance, nations are acutely aware that this is the moment to secure power and influence through AI oversight. The result isn’t a simple race to regulate; more complex dynamics are at play. For instance, China’s support for anchoring AI governance at the U.N. has been described as an attempt to constrain the current Western dominance in both AI technology and AI regulation. Conversely, Washington’s reluctance to involve international organizations may reflect a desire to keep governance of the key technology of our times close to home.

Both the way AI’s core features work and the commercial landscape that results from them make regulating AI more important and more difficult. Training AI takes a lot of data, and access to sufficient training data is severely constrained. AI can be trained on data that’s freely accessible online, but that risks complex copyright and privacy lawsuits. Alternatively, it can be trained by the small number of very large digital platform companies whose dominant digital services ingest mountains of training data.

For instance, Alphabet, Google’s parent company, can do its own AI training, thanks to the billions of data points that Google collects every day. Similarly, Meta can train its models on data gleaned from Facebook, Instagram, and WhatsApp. Microsoft, meanwhile, has teamed up with OpenAI through an investment worth billions of dollars. Alphabet, Meta, and Microsoft are all U.S. companies. The companies that lack the data access to train their own AI models sit elsewhere: in Europe and in the global south, with China perhaps the exception. The result is a very uneven distribution of AI providers, most of which are either directly owned by large platform companies or have strong links to them.

The West Coast Shapes Global Decision-Making

This unevenness translates into similarly skewed economics. Many businesses and organizations around the world need and want to use AI but lack their own AI models. Instead, they purchase use of AI models from the few large providers. The result is that the producers of AI are mostly located on the U.S. West Coast, while users of AI are dispersed around the world.

This mirrors the situation on the internet, with a small number of U.S. platform providers and billions of worldwide users. But unlike with social media, where user-generated content is relatively diverse, such centralized AI provision not only amasses most profits on the U.S. West Coast but, far more importantly, provides AI-generated answers to billions of users based on AI models that have been trained on data lacking global diversity. Or, to put it bluntly: West Coast-generated answers shape global decision-making.

This means that issues of AI governance are arguably far more important than those of internet governance. And the resulting power grab is far more complicated than a competition among nations because organizations and institutions within them are also jockeying for influence.

Navigating the Labyrinth of AI Regulation

Some nations have formed specialized AI governance institutions that cut across conventional regulatory boundaries to facilitate the rollout and enforcement of AI laws. But that has not eliminated the role of existing institutions: antitrust regulators, such as the Federal Trade Commission in the United States, concerned with the concentration of AI training data; data protection boards worried about the privacy of personal information used to train AI; and courts called on to adjudicate intellectual property claims over AI models trained on openly accessible yet mostly copyrighted materials. New regulatory and enforcement institutions compete with existing ones, and institutions in one subject area compete with those in others. Add to this the supranational and intranational layers already mentioned, and you get a sense of the complex regulatory land grab over AI that’s currently underway.

Importantly, appreciating this dynamic does not require assuming that any of these regulators behave irrationally (although some surely may). The dynamic unfolds simply because individual regulators act to enhance their power, a perfectly rational response to the emergence of a new phenomenon in need of regulation.

Is Regulatory Diversity a Need or a Nuisance?

Examining AI regulation from a realist’s perspective of power helps us understand the multitude of approaches and institutions involved. This is unlikely to change soon. But is the regulatory diversity we see unfolding a need or a nuisance?

Regulatory diversity is undoubtedly costly. Sustaining a multitude of regulatory institutions is expensive for society, and even more so if these institutions engage in turf wars. Far worse, companies and individuals must comply with a plethora of rules whenever their activities cross jurisdictional boundaries (which happens all the time online). The resulting transaction costs are a source of inefficiency, leading to higher prices and, potentially, lower service quality. In contrast, a homogeneous regulatory regime enables companies to reap economies of scale and scope. Given the significance of comprehensive training data and the economic reality that training an AI model is expensive but querying it is cheap, scale and scope effects are hugely beneficial for AI services. This suggests we should aim to reduce regulatory diversity to reap substantial efficiency gains, following the lead of decades of harmonizing economic regulations across institutional and jurisdictional boundaries.

The benefits of harmonization, however, rest on a key premise: that we have identified the right regulatory goal (or goals) as well as the appropriate regulatory mechanisms to achieve it. The push for efficiency through regulatory homogeneity works only if our regulatory path forward is sufficiently obvious. For AI, it is unclear whether this premise holds. Despite much public rhetoric to the contrary, we are still in the concept and search phase of regulating AI. While some argue that harmonizing AI regulations globally could prevent a race to the bottom in safety and ethical standards, there is no consensus on what exactly the goal of regulating AI ought to be; many AI regulations strive to achieve multiple goals that are interdependent in complex ways. Nor have we clearly and unambiguously identified the most appropriate mechanisms for achieving these goals.

A Case for Experimentation and Learning

In such a phase of regulatory uncertainty, what we need far more is rule diversity and the regulatory experimentation that follows from it. If we don’t yet know what we need, we must try many different strategies to find the most suitable one. Experimentation is necessary but not sufficient. The key to success is that we learn from our experiments. Otherwise, rule diversity and all the trials that follow will be in vain.

The rationale for learning from one another during phases of regulatory experimentation is rather obvious. Less obvious is how to achieve it in practice. Several scholars of international political economy have highlighted the importance of learning in contexts of regulatory interdependence, especially when regulatory ends and means are not yet well defined; they point to the importance of open channels of communication and innovative settings for such learning. Past cases include the regulation of ozone-depleting chemicals, new drug approval and monitoring, and genetically modified crops.

But most institutions of regulatory harmonization, from the intranational to the supranational level, were established and designed to bring regulators to a single best solution that has already been identified, rather than to enable a cacophony of regulatory strategies from which to learn. Institutionally, these bodies are ill-equipped to facilitate the kind of experimentation and learning that is currently most needed.

This puts us in a bind: If we want to enable regulatory experimentation and learning, we need institutions to facilitate it. But the very institutions at our disposal, such as the post-World War II Bretton Woods setup that culminated in the establishment of the World Trade Organization and championed global harmonization of trade regulations, are ill-suited to the task. Whether these institutions, so focused on a particular set solution, can be reconfigured in time to facilitate such open-ended learning is an open question. After all, we are not talking about fine-tuning existing processes but about replacing an institution’s very aims and means and reshaping its processes and inner workings accordingly. Perhaps we need entirely different institutions to aid in this experimentation and learning or, at the very least, a willingness to fundamentally reconfigure existing organizations. The framework for governing the Volta River Basin in West Africa may be an example of the former strategy (as is Wikipedia, if you prefer a more digital and less government-centric example), while the repeated (and ultimately successful) restructuring of ICANN after its initially failed start may be a case in point of the latter.

There is no proven blueprint, though. Experimentation and learning at the substantive regulatory level entail experimentation and learning at the institutional level as well. Collecting good ideas, sharing them with others, and learning from others’ experiences may be costly, but this approach is far more likely to succeed in the long term.

The Future of AI Regulation: Embracing Experimentation

The challenge of regulating AI is not simply about finding the right rules. It is about building the right institutional structures to support a dynamic and adaptive approach to governance. In a world of rapid technological change, the only constant is the need for continuous experimentation and learning. The global community must resist the temptation to rush into a homogeneous regulatory regime that may stifle innovation and fail to adequately address the unique challenges of AI. Instead, we must embrace a more flexible and collaborative model that allows for experimentation, the sharing of best practices, and learning from mistakes. Only then can we hope to harness the potential of AI while mitigating its risks and ensuring a more just and equitable future for all.