Consider this a word to the wise. Just as recent developments in artificial intelligence have affected us all and will continue to do so, quantum computing will amplify that impact and take us to places we can only imagine. This is the next truly disruptive technology on the horizon. It is not just an incremental improvement in computer hardware but a breakthrough in the way computers work and in how data is processed and handled, and it could supply the computing power needed to fulfill the aspirations of AI. My goal is to provide as many details as practical and introduce the fundamental “ingredients” of what is to come.

In short, quantum computing is an innovation that applies the laws of quantum mechanics to simulate and solve complex problems that are too difficult for the current generation of classical computers. It is more about science than hardware technology per se. It is still so new that most of us can’t define or properly understand it, but it might (many experts say will) be the next technology, currently obscure, to have a seismic effect on organizations of all types, including those in medicine, science and engineering.

A key turning point in the history of quantum computing was reached in 2019, when Google reported attaining “quantum supremacy.” The term refers to the point at which a quantum computer performs a specific task that even the most powerful classical supercomputers could not complete in a practical amount of time. This achievement suggests that quantum computers, when fully developed, could tackle certain complicated problems orders of magnitude faster than classical computers.

Let me say at the outset that the current field of quantum computers isn’t quite ready for prime time. The technology is in its early stages. McKinsey, among others, has estimated that there will be 5,000 quantum computers operational by 2030 and that the hardware and software necessary for handling the most complex problems will follow after that.

At first glance, this appears to give us all some breathing room to acclimate, but keep in mind that prognosticators and pundits don’t have a very good track record when it comes to forecasting technological advances, especially ones with such a huge upside. For one example, compare the forecasts for the expansion of AI with the reality.

The concept of forecasting the future of technological advances in computing dates back to 1965, when Gordon E. Moore, a co-founder of Intel, made an observation that became popularly known as Moore’s Law. Moore’s Law states that the number of transistors on a microchip doubles about every two years with a minimal cost increase. Put another way, the growth in microprocessor capability is exponential. Over the years it has been all about making the chips smaller and packing more transistors into a given space. Many have expanded this “axiom” to say that technology writ large “doubles” every two years and is exponential.
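To make the arithmetic behind that doubling concrete, here is a minimal sketch. The function name and the starting count are purely illustrative assumptions, not figures from Moore or Intel; the only thing taken from the text is the doubling period of roughly two years.

```python
def projected_transistors(start_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward under a fixed doubling period.

    Illustrative only: real chips do not follow the curve exactly.
    """
    # Each doubling period multiplies the count by 2, so growth is exponential.
    doublings = years / doubling_period_years
    return start_count * 2 ** doublings


# Hypothetical chip with 1,000 transistors, projected 10 years ahead:
# 10 years / 2 years per doubling = 5 doublings, i.e. a factor of 32.
print(projected_transistors(1_000, 10))
```

Five doublings in a decade already means a 32-fold increase, which is why even small changes in the doubling period have a large effect on long-range forecasts.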

Today, the general consensus is that we are approaching the physical limits of Moore’s Law and that those limits will be reached at some point in the 2020s. This is not new thinking. In a 2005 interview, Moore himself admitted that “…the fact that materials are made of atoms is the fundamental limitation and it’s not that far away…We’re pushing up against some fairly fundamental limits so one of these days we’re going to have to stop making things smaller.” This means that if we want to progress beyond current limitations, we will need to find ways of doing things that lie outside the existing technological paradigms.

Since we are approaching the currently known limits of traditional computing, this is where necessity becomes the mother of invention. If we look at AI and its various iterations, we need more (pun intended) computing power to take it to the next level. That demand will fuel developments in quantum computing that will exceed forecasts. The takeaway is that organizations need to start thinking now about where they might leverage the technology to solve real-world business problems.

Fear not, quantum computing is not going to totally replace conventional computers. Subject matter experts and researchers alike explain that typical businesses with common (small to moderate-sized) problems will not benefit from quantum computing. However, those trying to solve large problems where quantum algorithms offer exponential gains, and those that need to process very large datasets, will derive significant advantages. As one pioneer in the field opines, “Quantum computing is not going to be better for everything, just for some things.” I would add that the range of applications falling under “better for some things” is huge. Now, let’s get to the point of what it is, how it works and where it stands.

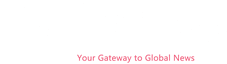

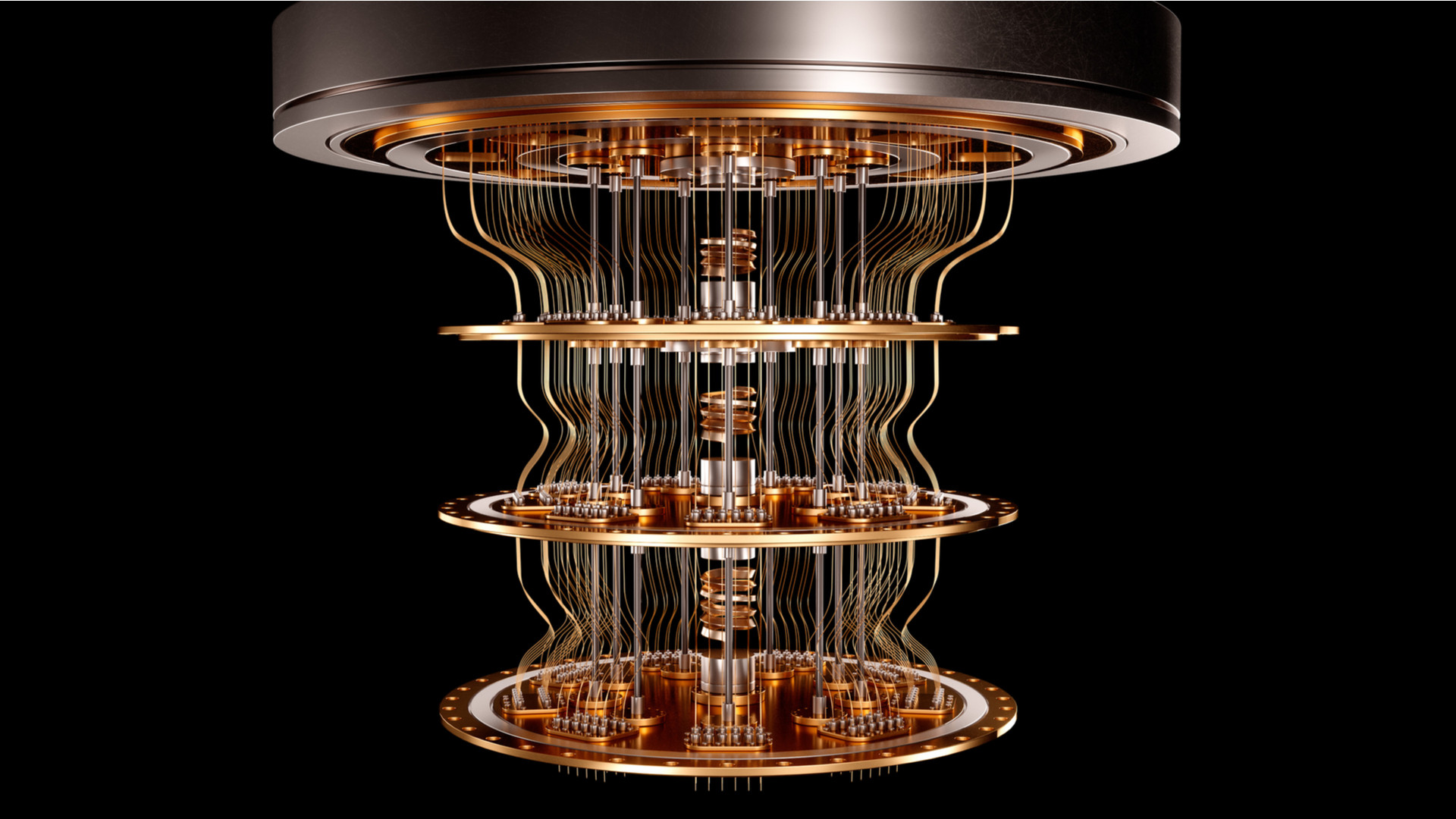

Quantum computing is a cutting-edge field of computer science that harnesses quantum mechanics to solve certain problems orders of magnitude faster than, and beyond the ability of, even the most powerful classical computers. When fully developed, a quantum computer will be able to address computational challenges in a matter of minutes that might take a classical computer thousands of years to complete. Yes, you read that right. The technology includes both quantum hardware and quantum algorithms. Fair warning: here comes the tutorial part, with an informational assist from IBM, Google, Amazon and Microsoft plus a few others to keep us on track.

So take a deep breath and let us dive in!

Quantum mechanics is the study of subatomic particles and the unique, fundamental natural principles that govern them. Quantum computers harness these principles to compute “probabilistically and quantum mechanically.” Think in terms of sorting and cross-referencing huge amounts of data to find the most probable outcomes. When discussing quantum computers, it is important to understand that quantum mechanics is not like traditional physics. As one physicist points out, “The behaviors of quantum particles often appear to be bizarre, counterintuitive or even impossible yet the laws of quantum mechanics dictate the order of the natural world.” Understanding quantum computing requires familiarity with four overriding principles of quantum mechanics:

Classical computers generally perform calculations sequentially, storing data in binary bits that each have one of two discrete states, 0 or 1. When combined into binary code and manipulated with logic operations, these bits can be used to create everything from simple operating systems to today's most advanced supercomputing calculations. The limitation lies in each bit being restricted to 0 or 1. We can get whatever we want in this binary world, but the downside is that it can take a lot of time to arrive. Think about the end game and consider the demand for, and value of, time when you think about the push to get quantum computers where they need to be as soon as possible.

Quantum computers function similarly to classical computers, but instead of single bits of information, they use qubits. Qubits are physical systems, such as atoms, superconducting electric circuits or other systems that behave like subatomic particles, whose state is described by a set of amplitudes applied to both 0 and 1, rather than by just one of two states (0 or 1). This complicated quantum mechanical concept is called superposition, as noted earlier. Through a process called quantum entanglement, those amplitudes can apply to multiple qubits simultaneously, so that the qubits' states become linked and must be described together.
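For readers who find it easier to think in numbers, the idea of amplitudes can be sketched in a few lines of ordinary Python. This is a simplified illustration on a classical computer, not how quantum hardware is programmed; the function name is hypothetical, and it assumes the standard rule that the probability of each measured result is the squared magnitude of its amplitude.

```python
import math


def measurement_probabilities(amplitudes):
    """Turn a list of amplitudes into the probability of measuring each state.

    Assumes the standard rule: probability = |amplitude| squared, and the
    probabilities of all states must add up to 1.
    """
    probabilities = [abs(a) ** 2 for a in amplitudes]
    if not math.isclose(sum(probabilities), 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must be normalized (probabilities sum to 1)")
    return probabilities


# One qubit in an equal superposition of 0 and 1.
one_qubit = [1 / math.sqrt(2), 1 / math.sqrt(2)]
print(measurement_probabilities(one_qubit))   # roughly 50% for 0, 50% for 1

# Two entangled qubits; the states are listed in the order 00, 01, 10, 11.
# Only 00 and 11 have non-zero amplitude, so the two qubits always agree.
entangled_pair = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]
print(measurement_probabilities(entangled_pair))
```

In the second example the amplitudes belong to the pair as a whole rather than to either qubit on its own, which is the sense in which entangled qubits have to be described together.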

Photo credits: Production Perig / Stock.adobe.com

A qubit can be “weighted” as a combination of zero and one at the same time. When combined, qubits in superposition scale exponentially: two qubits can represent four states at once, three can represent eight, four can represent sixteen, and so on, doubling with every qubit added. Think in terms of subatomic particles, the smallest known building blocks of the physical universe. Generally, qubits are created by manipulating and measuring quantum particles such as photons, electrons, trapped ions and atoms.
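The exponential scaling is easy to check with a short calculation. The sketch below counts how many amplitudes are needed to describe a register of qubits and, as a rough assumption, how much memory a classical computer would need to hold them at 16 bytes per amplitude (one double-precision complex number). The function names are illustrative.

```python
def amplitude_count(num_qubits: int) -> int:
    """Number of amplitudes needed to describe num_qubits qubits: 2 to the n."""
    return 2 ** num_qubits


def classical_memory_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Rough memory needed to store every amplitude on a classical machine.

    Assumes 16 bytes per amplitude (a double-precision complex number).
    """
    return amplitude_count(num_qubits) * bytes_per_amplitude


for n in (2, 3, 4, 30, 50):
    gib = classical_memory_bytes(n) / 2 ** 30
    print(f"{n:>2} qubits -> {amplitude_count(n):,} amplitudes, about {gib:,.6f} GiB")
```

Under that assumption, 30 qubits already require 16 GiB of classical memory and 50 qubits require millions of GiB, which gives a feel for why simulating even modest quantum systems strains conventional hardware.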

To manipulate such particles, qubits must be kept extremely cold to minimize noise and to prevent inaccurate results or errors caused by unintended decoherence. There are many different types of qubits used in quantum computing today, some better suited than others to particular types of tasks. One type does not fit all applications. According to experts involved in the technology, a few of the more common types of qubits already in use today are as follows:

The team at Innovation and Research points out that no approach has yet emerged to evaluate and compare qubit technologies that can produce a perfect quantum computer. However, they did find six key considerations and challenges for evaluating them:

Quantum processors do not perform calculations the same way classical computers do. Unlike classical computers, which must compute every step of a complicated calculation, quantum circuits made from logical qubits can act on many possible values at once, improving efficiency by many orders of magnitude for certain problems.

Quantum computers operate probabilistically and find the most likely solution to a problem, while traditional computers are deterministic, requiring laborious computations to determine a single specific outcome for any given input.
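What “probabilistic” means in practice is that a quantum program is typically run many times and the most frequent result is taken as the answer. The sketch below imitates that on a classical computer using Python's standard random number generator; the function name, the example amplitudes and the number of runs are all illustrative assumptions.

```python
import math
import random
from collections import Counter


def sample_measurements(amplitudes, shots, seed=0):
    """Simulate repeated measurements and count how often each result appears.

    Each result is drawn with probability |amplitude| squared. A fixed seed
    is used so the simulated experiment can be repeated.
    """
    rng = random.Random(seed)
    num_qubits = (len(amplitudes) - 1).bit_length()
    # Label the states as bit strings, e.g. "00", "01", "10", "11".
    labels = [format(i, f"0{num_qubits}b") for i in range(len(amplitudes))]
    weights = [abs(a) ** 2 for a in amplitudes]
    return Counter(rng.choices(labels, weights=weights, k=shots))


# A single qubit weighted 90% toward 0 and 10% toward 1.
biased_qubit = [math.sqrt(0.9), math.sqrt(0.1)]
counts = sample_measurements(biased_qubit, shots=1000)
most_likely, _ = counts.most_common(1)[0]
print(counts, "most likely result:", most_likely)
```

Any single run may return either result, but over many runs the most probable answer stands out. A deterministic classical program, by contrast, returns the same output every time it is given the same input.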

One of the leaders in quantum computing, IBM, says that “Describing the behaviors of quantum particles presents a unique challenge. Most common-sense paradigms for the natural world lack the vocabulary to communicate the surprising behaviors of quantum particles.” The company offers the following to help us connect the dots.

To understand quantum computing, it is important to understand how the key principles of quantum mechanics mentioned earlier work in quantum computing:

Quantum computers are expensive and require a deep understanding of quantum mechanics, computer science, and engineering. Finding professionals with expertise in all three is difficult, but the gap in availability versus demand for this talent will be filled. Also, the environments in which they operate are “extreme” so there are challenges to overcome but there are no apparent “deal breakers.”

Despite the challenges facing quantum computing, its future looks promising. It will become a fundamental tool for scientific research, making it easier to solve problems that were previously impossible. The battle for supremacy in this science and technology is heating up. Technology giants such as IBM, Google, Microsoft, Intel and Amazon Web Services (with its Amazon Braket service), along with overseas companies like the Alibaba Group and Baidu out of China and EVIDEN out of France, are investing large sums of money in the field. Governments are also beginning to see the strategic importance of quantum computing, resulting in increased funding and collaborative efforts.

This is complex to say the least and a lot to absorb even on the surface, but for those who take the time it provides a view into the not-too-distant future. Whether or not we understand the science, most of you will immediately connect the dots and see where the interests of AI and quantum computing intersect and where this is headed. Yes, there are still some challenges, but experts agree there is nothing that cannot be overcome…so stay tuned and buckle up!

Alan C. Brawn, CTS, DSCE, DSDE, DSDE, DSNE, DSSP, ISF-C, is principal at Brawn Consulting.