On Tuesday, Aug. 27, the U.S. Court of Appeals for the Third Circuit, based in Philadelphia, reversed a district court’s decision in a case against the social media platform TikTok, re-examining and reinterpreting a long-standing law on internet publishers. TikTok will now be brought back into court over dangerous content published on its site.

The district court overseeing the suit originally stated, “In thus promoting the work of others, [TikTok] published that work — exactly the activity Section 230 shields from liability.” The court found that TikTok could not be held directly responsible. The Third Circuit not only overturned that ruling but also reinterpreted Section 230 of the Communications Decency Act. The law was enacted in 1996 and has built up a long line of legal precedent, even as social media companies have grown more complex than simple websites and hyperlinks.

The section states that platforms are not to be held responsible for the content hosted or shared on their platforms. The argument in favor of the law when it was written was that it promoted free speech while leaving platforms free to act on content that violated their own private terms of service.

The Electronic Frontier Foundation (EFF) is a non-profit digital rights group that describes its goals as “leading technologists, activists, and attorneys in our efforts to defend free speech online.” A strong defender of the CDA and Section 230 since its inception, the group maintains that the law protects the intermediaries and platforms that internet users rely on. “Section 230 allows for web operators, large and small, to moderate user speech and content as they see fit,” the EFF states in its explanation of why the law has been a net good since the Clinton administration.

After nearly 30 years, however, the Third Circuit has reconsidered when a platform can be held liable for the algorithms it uses.

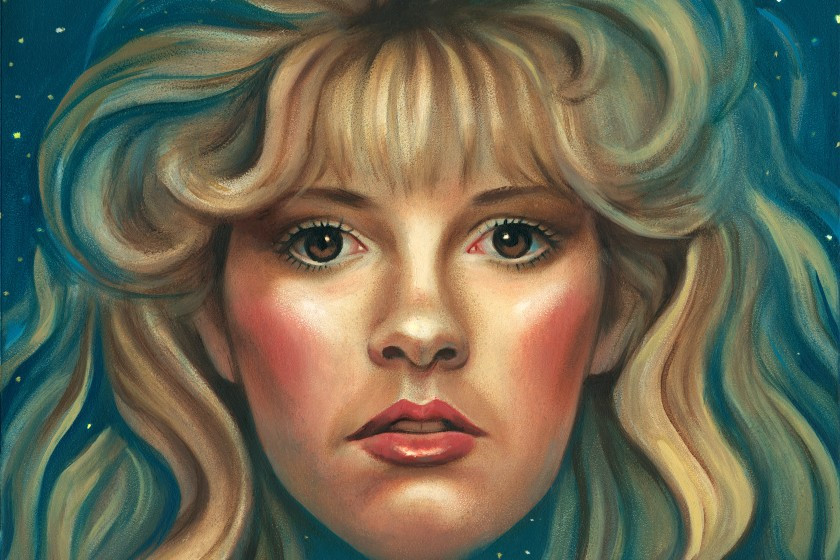

Dr. Andy Famiglietti, associate professor in the English Department at West Chester University, gave The Quad some insight into this decision’s implications. The suit was brought by the mother of Nylah Anderson, a 10-year-old who died after attempting the “Blackout Challenge,” a self-asphyxiation dare she encountered on TikTok. “What happened to Nylah Anderson is utterly tragic, and the ‘For You’ algorithm does seem to have played a role in her consuming ‘Blackout Challenge’ content. It isn’t the only case of problematic content being suggested by [an] algorithmic process.”

Famiglietti told The Quad that some legal experts are concerned about the implications. If Section 230 does not protect platforms from liability for algorithmically selected content, they argue, then virtually anything on the internet could be used against a platform or website in a lawsuit, likely making it liable for everything on its site.

Critics of the law have been looking forward to a decision like this for years. “[A]t the very least, this decision forces the issue,” wrote Matt Stoller on his Substack, TheBigNewsletter. “And that means the gravy train, where big tech has been able to pollute our society without any responsibility, is likely ending.”

Stoller is the research director of the American Economic Liberties Project (AELP), a non-profit organization that advocates for antitrust legislation targeted at corporations. AELP is funded by billionaire eBay founder Pierre Morad Omidyar. Stoller and his organization view the Third Circuit’s ruling with optimism. Others, however, worry about what the decision means for the freedom and safety of users online and how it may affect future law.

Stoller points out that the Third Circuit decision drew a distinction: media apps and platforms should not be viewed as neutral publishers of content, but rather as distributors that make editorial decisions about what content stays, gets boosted or gets suppressed.

Famiglietti cautions, however, that the ruling could have adverse effects on algorithmic content curation. If platforms abandon algorithmic filtering, he said, harmful content such as the “Blackout Challenge” could become more prominent, and online spaces could ultimately become more hostile and filled with unregulated hate speech.

“I think there’s a lot of concern about algorithmic content suggestion,” Famiglietti said in closing, “but…also a lot of concern that this Third Circuit decision is not the best way to address issues with that practice. It will be interesting to see what happens as this decision gets reviewed further!”

Gaven Mitchell is a fourth-year History major with a minor in Journalism. [email protected]